Artificial Intelligence (AI) Security Assessment

Artificial Intelligence (AI)

Security Assessment

Trusted Cyber Security Experts

25+ Years Industry Experience

Ethical, Professional & Pragmatic

An Artificial Intelligence (AI) Security Assessment helps you identify security weaknesses in your Large Language Model (LLM) which could allow an attacker to expose sensitive information, cause your LLM model to generate malicious responses or to reverse engineer your proprietary AI design.

Using Artificial Intelligence (AI) or Large Language Models (LLMs) for your organisation can present critical cyber security risks to your organisation’s data if they are not securely deployed and managed. AI security risks may relate to data leakage, adversarial manipulation, or the integrity of the underlying Large Language Model (LLM) being poisoned by a malicious user.

Have you had an AI Security Assessment?

If you have not conducted an AI security assessment or a Large Language Model (LLM) penetration test of your AI application, you could be unaware that it may be unintentionally revealing sensitive or proprietary information, which may either be embedded in AI training data or carried over from previous user interactions. Adversarial prompt injection is a significant concern to organisations that make use of Artificial Intelligence (AI), where attackers craft inputs to manipulate the model into disclosing confidential information or performing unintended actions. Our Artificial Intelligence (AI) Security Assessment helps identify if an attacker can poison datasets or alter your AI model’s behaviour – leading to biased, harmful, or malicious outputs.

The importance of LLM Penetration Testing

Our Large Language Model (LLM) penetration testing helps identify security weaknesses in your LLM APIs that could allow a malicious user to extract model parameters or reverse-engineer your LLM functionality. Identifying these vulnerabilities during an AI Security Assessment highlights the importance of robust AI security practices, including input sanitisation, secure API configurations, and stringent model access controls, to protect your organisation’s data in your LLM applications.

Team up with our AI Security Experts

Our CREST accredited penetration testers have extensive experience in conducting AI Security Assessments and penetration tests of bespoke Large Language Models (LLMs) for a wide range of customers. Using custom-written LLM penetration testing tools & scripts, combined with the latest OWASP Top 10 Security Vulnerabilities for Large Language Model (LLM) Applications & Generative Artificial Intelligence security guidance, our AI penetration testing team are equipped to identify the latest vulnerabilities that an attacker could use to compromise security flaws in your organisation’s Large Language Model (LLM) or Artificial Intelligence (AI) application.

What types of vulnerabilities can an Artificial Intelligence (AI) Security Assessment identify?

Conducting a targeted AI security assessment of your organisation’s Large Language Model (LLM) or AI application provides you with visibility of the security flaws that may be identified and exploited by a determined attacker. Some common security weaknesses that are often found during an Artificial Intelligence (AI) Security Assessment include:

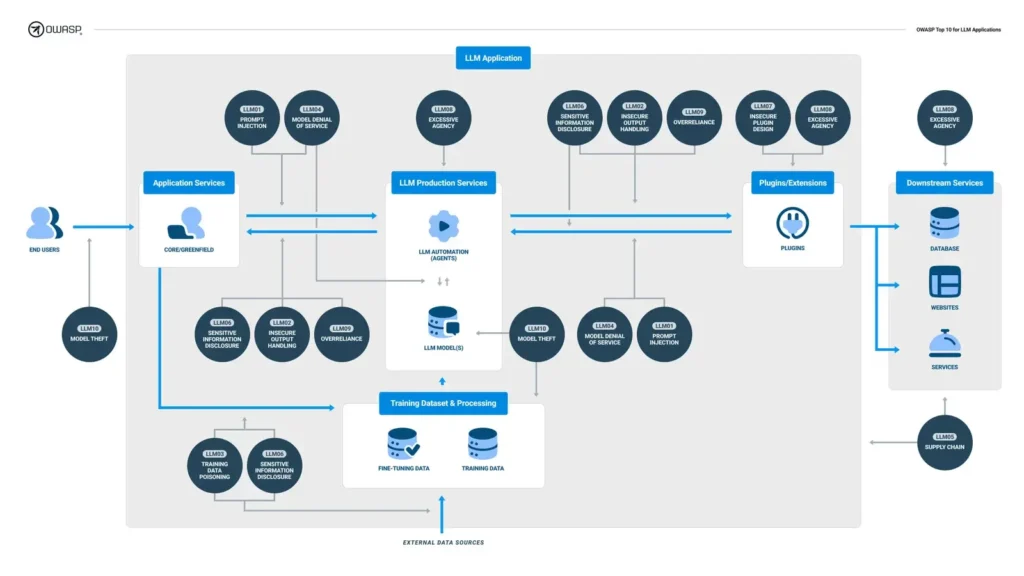

The following diagram from the Open Web Application Security Project (OWASP) highlights the areas that these common AI-related vulnerabilities may affect Large Language Model (LLM) applications:

Testing Process & Methodology

During an Artificial Intelligence (AI) Security Assessment or LLM penetration test, our consultants use a combination of automated tools & scripts and detailed manual testing of your AI application or Large Language Model (LLM). This allows us to efficiently & accurately test the LLM responses and the API endpoints through which it is accessed by your users. While automated tools can provide our team with a high-level overview of security flaws, much of our LLM testing process is manual and relies on us injecting requests into your LLM API and conducting an analysis on the responses that it returns.

All of our Large Language Model (LLM) penetration tests or security assessments of Artificial Intelligence (AI) applications are conducted in line with the OWASP Top 10 for LLM Applications and Generative AI. This ensures that we test for the very latest application-level LLM vulnerabilities through a recognised testing process.

We also ensure that our LLM penetration testing and AI security assessments are carried out in line with other globally-recognised standards, such as the Penetration Testing Execution Standard (PTES) and the Open Source Security Testing Methodology Manual (OSSTMM). In addition to this, as a member of the Council of Registered Ethical Security Testers (CREST), we conduct our testing and consultancy to the highest, industry-recognised standards and ethics.

Preparing for your AI Security Assessment

In order for us to perform an AI security assessment or a Large Language Model (LLM) penetration test, we will require the following prior to the test commencing:

- A signed & completed Testing Consent Form.

- The base URL that the in-scope LLM API endpoint(s) reside under.

- API description document (preferably in the OpenAPI Specification (OAS) (e.g. Swagger) format) of the API that applications or users access your Large Language Model (LLM) through.

- Two sets of credentials for each user role to be tested.

- A technical contact (e.g. LLM developer) who can provide our testing team with a walk-through of the various LLM interactions.

- If a Web Application Firewall (WAF) resides in front of the application, we will need this to be disabled or SecureTeam’s IP address range to be ‘whitelisted’ for the duration of the testing. This ensures that the WAF does not interfere with the testing and allows us to provide you with an accurate set of results.

Reporting

Our clear & concise penetration test reports enable everyone in your organisation to understand the vulnerabilities that have been identified and the real-world risk that your systems and data are exposed to.

Our AI security assessment reports include:

- A “board-level friendly” Executive Summary

- Comprehensive, evidence-based vulnerability reporting

- Risk-based vulnerability ranking with CVSS Scoring

- Technical references and links for further research by your technical team

Debrief Call

Once you have received our final report for your AI security assessment, you have the option of attending a conference call between the consultant(s) involved in delivering your project and individuals within your organisation who you feel would benefit from a more in-depth discussion of the report’s findings.

After Care

Once our consultancy engagement is complete and our final report has been delivered to you, our consultancy team remain available to you indefinitely for any questions you may have surrounding the report’s findings or our consultancy engagement with you.

Why Choose SecureTeam?

- UK-based Consultancy Team

- Customer Focused

- Security Vetted Consultants

- Ethical Scoping & Pricing

- 25+ Years Industry Experience

- ISO 9001 & 27001 Certified

- CREST Accredited

- Comprehensive Reporting

Ready to take your cyber security to the next level ?

Trusted Cyber Security Experts

As an organisation, SecureTeam has provided penetration testing and cyber security consultancy to public & private sector organisations both in the United Kingdom and worldwide. We pride ourselves in taking a professional, pragmatic and customer-centric approach, delivering expert cyber security consultancy – on time and within budget – regardless of the size of your organisation.

Our customer base ranges from small tech start-ups through to large multi-national organisations across nearly every sector – in nearly every continent. Some of the organisation’s who have trusted SecureTeam as their cyber security partner include:

Customer Testimonials

"Within a very tight timescale, SecureTeam managed to deliver a highly professional service efficiently. The team helped the process with regular updates and escalation where necessary. Would highly recommend"

IoT Solutions Group Limited Chief Technology Officer (CTO) & Founder

“First class service as ever. We learn something new each year! Thank you to all your team.”

Royal Haskoning DHV Service Delivery Manager

“We’ve worked with SecureTeam for a few years to conduct our testing. The team make it easy to deal with them; they are attentive and explain detailed reports in a jargon-free way that allows the less technical people to understand. I wouldn’t work with anyone else for our cyber security.”

Capital Asset Management Head of Operations

“SecureTeam provided Derbyshire's Education Data Hub with an approachable and professional service to ensure our schools were able to successfully certify for Cyber Essentials. The team provided a smooth end-to-end service and were always on hand to offer advice when necessary.”

Derbyshire County Council Team Manager Education Data Hub

“A very efficient, professional, and friendly delivery of our testing and the results. You delivered exactly what we asked for in the timeframe we needed it, while maintaining quality and integrity. A great job, done well.”

AMX Solutions IT Project Officer

“We were very pleased with the work and report provided. It was easy to translate the provided details into some actionable tasks on our end so that was great. We always appreciate the ongoing support.”

Innovez Ltd Support Officer

"SecureTeam have provided penetration testing for our system since 2021, and I cannot recommend them enough. The service is efficient & professional, and the team are fantastic to work with; always extremely helpful, friendly, and accommodating."

Lexxika Commercial DirectorGet in touch today

If you’d like to see how SecureTeam can take your cybersecurity posture to the next level, we’d love to hear from you, learn about your requirements and then send you a free quotation for our services.

Our customers love our fast-turnaround, “no-nonsense” quotations – not to mention that we hate high-pressure sales tactics as much as you do.

We know that every organisation is unique, so our detailed scoping process ensures that we provide you with an accurate quotation for our services, which we trust you’ll find highly competitive.

Get in touch with us today and a member of our team will be in touch to provide you with a quotation.

Subscribe to our monthly newsletter today

If you’d like to stay up-to-date with the latest cyber security news and articles from our technical team, you can sign up to our monthly newsletter.

We hate spam as much as you do, so we promise not to bombard you with emails. We’ll send you a single, curated email each month that contains all of our cyber security news and articles for that month.

“We were very impressed with the service, I will say, the vulnerability found was one our previous organisation had not picked up, which does make you wonder if anything else was missed.”

Aim Ltd Chief Technology Officer (CTO)